Ethical Dilemma Cafe Kicks Off Community Ethics In Tech Project

The following post was written by MozFest Community member Craig Steele and originally posted on the Mozilla Festival website. Image Credits: Connor B. and Craig Steele.

How much do you value your personal information? Would you be willing to hand over some personal information in exchange for a free coffee? That’s exactly what I did when I visited a café where the currency is your data.

Being part of the Mozilla community

I’ve been a fan of Mozilla (and Firefox user) ever since I first joined the web. Then, in 2013, I was awarded a “Digital Makers Fund” grant from Mozilla, Nominet, and Nesta to grow CoderDojo, a network of volunteer-led coding clubs for young people in Scotland.

Since then I’ve continued to follow the Mozilla Foundation, and have joined the community every year at MozFest. At the most recent MozFest, my colleague Daniel and I led an interactive activity where we looked at how data science is used to defend rhinos from poachers.

The Mozilla Festival is a key moment on my calendar. It’s a great way to connect with like-minded technologists and creatives. I always learn something new, and usually leave filled with ideas. It’s that excitement that drew me to take part in the Ethical Dilemma Café spin-off event.

My trip to the Ethical Dilemma Café

The Feel Good Club on Hilton Street in Manchester was transformed into the Ethical Dilemma Café. Mozilla and the BBC’s Research and Development department worked together to create this event to get people thinking about data consent and privacy.

So what is it? The Ethical Dilemma Café is a café with a catch. Even before stepping inside, we were warned that we were consenting to have our personal data tracked in the café. By opening the door, we were agreeing to those terms and conditions.

Inside, there were microphones and cameras placed beside the tables, watching and listening to everything going on. Some of those cameras and microphones could be controlled remotely by visitors to the website.

To get the free coffee, Daniel scanned a QR code on his lanyard and then logged in to the “Coffee with Strings” app. This is the point where you have to answer a personal question, handing over sensitive details to get your free coffee. Once you’ve answered, you get the virtual token to exchange at the till.

Being spied on while you sip a latte isn’t something you’d normally expect in a local coffee shop, but the café is a metaphor for today’s Internet. Often online we’re given something we really want, such as the latest music, news articles, or entertaining videos on YouTube, but it’s not truly free: we’re trading some of our personal data in exchange for what we want.

Other things to explore in the café

As well as the free coffee, the Ethical Dilemma Café had a bunch of things to see and do. There were installations, talks, and workshops by BBC R&D, Lancaster University, Open Data Manchester CIC, and Northumbria University.

My highlights include Edge of Tomorrow, an arcade game by Lancaster University. This game explained some of the environmental effects that can be caused by cyber attacks.

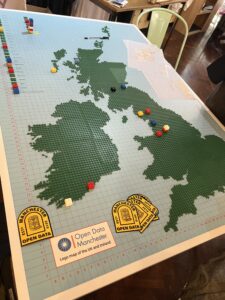

A data visualisation from Open Data Manchester got us to use LEGO blocks to plot our happy places. The coloured bricks represented our happiness levels, and where we placed them on the map corresponded to the place we were most happy.

Daniel and I squeezed into The Caravan of the Future, an immersive design showcasing what the living room of the future might look like. Using voice assistants, we were able to speak directly to the caravan and it adjusted the lights, temperature, and environment to suit us. Based on the way we looked and our facial expressions, it even tried to recommend a TV show it thought we might enjoy.

Want to help school pupils fight biased algorithms?

This research trip was the perfect start to our own new education project: we’re creating an “Ethics in Tech” interactive learning resource that will help primary school pupils learn about racist, sexist, and ageist computer algorithms. We need to prepare the next generation of digital leaders to understand the dangers of biased algorithms. To fight inequality, they need to know how to spot them, and how to tackle them.

As part of the research and development phase, I want to connect with technology professionals across the country who have experience creating algorithms that directly affect people. Get in touch with me if you want to learn more.

The “Ethics in Tech” project is supported by Digital Xtra Fund, a Scottish charity that helps enable extracurricular digital tech activities for young people, and is funded by the Scottish Government.

The Ethical Dilemma Café challenged me to think about the value of personal data, and how data and algorithms shape our world today. It was fun taking part in this small-scale event, and it definitely got me more excited for next year’s Mozilla Festival.

About the Author

Craig Steele is a computer scientist, educator, published author, and creative technologist, who helps people develop digital skills in a fun and creative environment. His company, Digital Skills Education, offers digital skills training across Scotland and internationally.